Hard Edges

Practical Domain-Driven Design using C#

Introduction

What's the big idea?

Domain-Driven Design is all about being explicit about how your object model functions. To this end, you need to reason in terms of hard edges around the various bits. Defining those bits can be tricky, but I hope that with the help of this guide things may be somewhat simpler or, at least, get you moving in a sensible direction.

I will be focusing on practical examples and scenarios that you may run into in your day-to-day modeling and the techniques you may wish to consider in order to implement a pragmatic solution. I will be covering some of the basic concepts of Domain-Driven Design, but there is ample literature on the matter, so I'll be focusing on where the rubber meets the road. Feel free to skip over the concepts if you are comfortable in your understanding thereof.

We need to correctly divide up our domain in order to find the correct structure. This will require constant refactoring as we gain a better understanding of the domain. We need to constantly analyze our model to break down concepts into simpler building blocks and then synthesize them into more meaningful structures. This is something that is an iterative and ongoing exercise.

Remember that at the end of the day we are after a fluid and expressive domain model that will enable us to incorporate changes quicker and focus on the complexity within the domain. Always keep the end user in mind as this is going to be someone who may very well spend their entire working day using a system that you built. There is a great deal of satisfaction to be gained from providing a useful and usable system to one's users.

Target Audience

This guidance is aimed at those software developers who would like additional resources to aid them in their modeling efforts as well as anyone interested in, or using, Domain-Driven Design. However, please keep in mind that this is my current opinion on how to tackle the identified issues. You should not substitute it for your own, and you do not need to believe everything I say. You may have more experience dealing with a particular problem and, as such, your solution may very well be a better fit. Please do let me know if you have any ideas on improving the guidance. If we get stuck on a particular way of thinking, we'll be just that: stuck.

I will be covering some of the Domain-Driven Design concepts very briefly, so if you are already familiar with those, then feel free to skip over the relevant sections.

About The Author

Eben Roux has over 20 years of experience in the professional arena as a developer, consultant, and architect within many industries and has provided strategies and solutions that have contributed to the successful implementation of various systems. He believes firmly in the development of quality software that empowers users to get their job done.

His current focus is on domain-driven design implemented within an event-driven architecture based on message-oriented middleware.

"I'd like to thank my wife Amanda and my two wonderful boys Reynard and Reynier for their love and support in allowing me the time and patience to write this guide in aid of the developer community, if only in a small way"

Concepts

Why is Domain-Driven Design so hard?

At first, Domain-Driven Design may seem like a daunting experience, and you may be wondering about the following:

- Where do I start?

- Is Domain-Driven Design the right fit for my system?

- Would a simple CRUD solution not be simpler?

- Why is it so difficult to identify the concepts?

These are all valid questions and can be widened to include most aspects of design, not just in the computer realm.

An aspect that most people do not consider when it comes to programming in general is that there is quite a bit of creativity involved, and creativity is something that is all but impossible to learn. Creativity appears to come to the fore when we are faced with an indeterminate number of possibilities. This leads to one design not necessarily being better or worse than the next but rather just different.

There are different styles of music, and each person has a general type of music they prefer. Some prefer many different styles, and within a particular genre of music there are also certain musicians or bands that one may prefer over others. Does this mean one genre is better or worse than the next? Most certainly not.

Is it possible to learn how to be a great musician? One can learn all the basics of music and all the notes, scales, and chords. There is no variance when it comes to this scientific or fixed part of the music creation process. However, someone may be an excellent singer, pianist, or guitarist, but without something original to rise above the rest you can only reproduce existing music.

There are an infinite number of combinations of notes to produce music, as there are an infinite number of combinations of letters and words to create stories, as there are an infinite number of combinations of paint and color to produce artwork.

This is also true for any computer system design. There are certain structures (conditionals, loops, classes, etc.) that exist in any computer language, and most programmers can pick up those and be proficient in a matter of months. However, there are an infinite number of ways in which we can put these structures together to design a solution. This is the "difficult" part.

This is where design patterns and perhaps some practical guidance such as this book can assist by making some concepts a bit more concrete, and once we understand these concepts, we can reason about a solution in a simpler way.

Object orientation is only one style of programming, and if someone is not comfortable with this form of design, then it certainly is possible to provide a solution using a different mechanism. Object orientation brings its own kind of magic to software solutions, and I'm sure many proponents of other approaches will say the same in respect of their approach. Use what you need and what you are comfortable with. Objects have not failed me yet and are flexible enough to provide solutions for any level of complexity.

It is not that Domain-Driven Design is difficult in and of itself, but rather that design in general is tricky, and it involves a lot of trial and error at the best of times. Keep refactoring and try different approaches until you hit something that feels right and that expresses the concepts in a fluid way.

Cohesion and Coupling

We have all heard that we need low coupling and high cohesion when it comes to software design in general. Although we know what this means, it can get rather tricky to identify when we are going off track.

Cohesion

Cohesion relates quite nicely to Robert C. Martin's Single Responsibility Principle that forms part of his SOLID principles. We should only place functionality together, typically in a class, when it all belongs together. No definitive list or set of rules will be able to identify all the moving parts that do belong together, but if you feel that some parts can be split out without affecting how the class behaves, then it may be a candidate for a different class. If you find that you need to re-use a bit of functionality contained in another class, then perhaps that bit of functionality is a candidate to be extracted.

Coupling

Again, Robert C. Martin's Dependency Inversion Principle comes into play here. If we can rely on an interface contract as opposed to an actual implementation, then things start going better already. However, this does not mean that all classes have to be an implementation of an interface. Many domain classes require only state-based interactions and, as such, an interface would not add any value. An interface is most useful when abstracting behavior such as one would require in repositories or services.

Another coupling scenario is where relationships are represented in an object model. When it comes to traditional database Entity-Relationship modeling the fact that there are relationships between database entities tends to create a situation where it is too easy to follow the relations as far as they go; and sometimes they could go in circles. This is another area discussed in the guide where we need to create a hard edge around our aggregates.

Behavioural Coupling

When one component has to know how another component functions there exists a high degree of behavioural coupling. This should be avoided where possible. It is certainly in order to issue a command to another component, and in such scenarios the behavioural coupling cannot be avoided. However, notifications should only be concerned with the behavior and data related directly to the component doing the notifying. For instance, in a message-based solution we may have an endpoint that can send e-mails. Sending a command to that endpoint requires us to, at the very least, know that the endpoint can actually accept and, ultimately, process the command we are sending. Once the e-mail sender endpoint has completed its processing, it should publish an event to notify any endpoint that subscribes to the event. This subscription mechanism should be dealt with by an infrastructure component so that the component doing the notifying need not be concerned with any of the subscribers. In this way we have removed all behavioural coupling between the components, as the publisher has absolutely no interest in what a subscriber will do with its copy of the event.

Temporal Coupling

When I pick up my phone and I place a call and no one answers the call, I simply have to remember to call again. This is high temporal coupling since I have to rely on the person on the other end to be available to answer my call at a specific time. On the other hand, when I send someone an e-mail I feel quite comfortable that they will receive it in due course and respond if required. This is low temporal coupling since the e-mail can be sent irrespective of whether the recipient is available or not.

Business Terminology

It may be worthwhile mentioning that there is a difference between the business terminology and how it maps to the strategic patterns in Domain-Driven Design. There isn't necessarily always a one-to-one mapping between these concepts, and we are going to have scenarios where the technical approach has to remain in the technical solution space; the business folks do not have to know about these.

Domain

The domain is the total area of focus for our model. Irrespective of the size of the domain, you are in all likelihood going to run into a situation where different parts of your domain model will belong together. As with most things, we will divide and conquer.

Although the following divisions in the domain are given specific names based on how you view them, it is largely academic. We shouldn't need to split hairs over this, and if you start off grouping the domain into logical units and calling each a subdomain then that is absolutely fine for a start.

Core Domain

This is the main part of your domain and carries the most weight. In terms of stock market parlance, this falls right in the headline earnings space. It is the raison d'être of your domain. This part has to work and has to work well and is that part of your system that everything revolves around. If you have a hard time nailing this down, it means that you have a rather large core domain. It is what it is.

Generic Subdomain

These aid in performing certain tasks in a generic way, and the implementation details are not that important to our core domain. Financial accounting and calculation engines could be bought off the shelf and be used to perform these functions.

Supporting Subdomain

I think there is often confusion between generic and supporting subdomains. A supporting subdomain isn't something that is generic enough that you would probably find anything off the shelf available. It would be quite specific to your business requirements but still does not fall into the core of the business.

Technical Terminology

Bounded Contexts

When you consider your business domain as a whole, it may be somewhat overwhelming. Not only that, but it may be quite difficult to reason about the various parts given that the behavior of various classes may seem to change depending on how you interact with them.

It is for this reason that Domain-Driven Design has the concept of a Bounded Context where you can group together functionality based on the area they are related to. Typically, you would need a Domain Expert to identify these since we, as developers, hardly ever have in-depth knowledge of any particular domain. As your understanding and knowledge of a particular domain grows, you will find that you can refine your design.

Having a Bounded Context at a granularity that is too fine or focused can also lead to problems. You should most definitely not have a single Aggregate Root represent an entire Bounded Context.

Sometimes you may run into a situation where the exact same concept appears in more than one Bounded Context. In most cases this indicates that your Bounded Context is too finely divided. However, keep in mind that it is entirely possible that you need to use the same concept across Bounded Contexts. If this happens, you either need to use another Bounded Context to represent that concept or you may need to use a Shared Kernel. It is also entirely possible that you may need to represent an Aggregate Root from an upstream Bounded Context in your downstream Bounded Context. In such a case you will represent it as a Value Object.

Stadium example

To make the concept of a Bounded Context clearer, we could take seats in a stadium as an example. A stadium contains seats, but the same seat represents different things to different people.

Financial Management

In most scenarios one would have to keep an asset register which is typically owned by the financial folks since they are the system of record for any assets. The finance department would be interested in data for an Asset such as:

- asset number

- asset type

- date of purchase

- cost

- method of depreciation

- date commissioned

- date decommissioned

They would also be interested in the following behavior:

- Commission()

- Depreciate()

- Decommission()

Maintenance Management

The maintenance department would be interested in data for a MaintenanceItem such as:

- next maintenance date

- maintenance schedules

The behavior for them would be along the lines of:

- ScheduleMaintenance()

- RegisterMaintenanceSchedule()

Event Management

The event booking folks would have data for a BookableItem like this:

- event name

- event date

- seat number(s)

And the relevant behavior would include:

- RegisterEvent()

- SellTicket()

Putting it all together

As we can see, even though a particular seat is a single physical thing in the real world, it can mean very different things to different people. This is where a Bounded Context comes in handy. You may even find that a similar concept is called something else in each context. You may find that an Employee in the HR Bounded Context is called a User in the Identity & Access Control Bounded Context, or an Author in the Collaboration Bounded Context.

As behavior is invoked on various objects within each Bounded Context, the other Bounded Contexts may need to be informed. To accomplish this, one would need some communication mechanism based on an Event Driven Architecture. That is, of course, where a service bus, such as Shuttle.Esb, would come in handy.

The Financial Management Bounded Context could publish an AssetRegisteredEvent, and the other Bounded Contexts would subscribe to that event and then determine if they need to register the asset number as an item they are interested in.

When the Maintenance Management Bounded Context removes a seat for maintenance, it would publish an \linebreakItemRemovedForMaintenanceEvent that the Booking Management Bounded Context would subscribe to in order to exclude the seat as a bookable item for any events until the item is made available again. Any EventBooking that has this seat allocated to it may need to kick off some business process to inform the customer about the change and then go about rectifying the situation.

Canonical Models

All this is quite different and in sharp contrast to what many enterprise-level architects refer to as a canonical data model. For our scenario a canonical model would need to include all the data from the various Bounded Contexts. This is quite cumbersome and really does not add any real value. Canonical Models tend to be so all-inclusive that just about everything ends up being optional data, and each system interacting with the data would need various validation approaches to ensure that the data is correct.

From a Domain-Driven Design perspective, we would want those rules, or invariants, as part of our domain code.

Shared Kernel

You may find some concepts in your domain that truly do cross over between Bounded Contexts. These will usually take the form of Value Objects and other infrastructure-type classes. You can package these separately and safely share the assembly among your Bounded Contexts.

Ubiquitous Language

The Ubiquitous Language represents an agreed-upon set of terms that are specific to a Bounded Context and keeps everyone concerned with the development of the Bounded Context on the same page, but it may be just as tricky to define what is regarded as belonging in the Ubiquitous Language.

What I have come across is that we may distill certain concepts into more generic artifacts within the domain model that a Domain Expert may not necessarily be aware of and that there would be no point in even discussing these with the Domain Expert as it may muddy the waters. This is the same reason we should not refer to concepts in terms of database structures or use other technical concepts and terms. We would also exclude all infrastructure components such as SqlConnection and the like.

For instance, if a Domain Expert always only refers to a Bank Account and the client's Investment Account, we could quite possibly come up with some type of common Account structure that accommodates both scenarios. You may be thinking that we could just use inheritance and stick with the original terms, but we will be introducing more into our domain model than is required since we would need to implement the associated Repository classes also. In the given scenario of only two options, it may not seem like a big deal, but when we run into tens or hundreds, then it becomes almost certain that we need a common implementation.

There may be times when it may be appropriate to discuss the new concept as it may be a business concept that may have been missed, but if you find that it is more of a technical solution to create a more expressive domain, then you may want to exclude it from the Ubiquitous Language.

As developers, one of our main functions is to identify patterns and distill those into the required types. We may be working on an insurance claim system, and our Domain Expert may refer to many different claim types and mention that each has a set of rules and behavior that appear specific to that claim type. Whenever we approach this, we need to find the lowest common denominator and work from there in order to find the reusable parts. We most certainly do not want to start by creating an Aggregate Root for each available claim that can be registered.

Reachability / Reusability

When we have a bit of code, we always need some way to invoke that functionality. We need some way of reaching that code. Here I'm not referring to a user invoking the functionality but rather the code sitting behind that invocation is what I'm referring to.

We should be aiming for reusability here in an Open/Closed Principle sense. Referencing functionality through an assembly or some proxy (over the wire) is the go-to method.

The more reusable the option, the more design is involved in order to find the balance between reusability and business functionality.

Copy & Paste

This is the most simplistic way to invoke functionality that we require. It is also the worst way one could go about doing so. We have a couple of lines of code, or a method, and we simply copy what we need and paste it in its new home. The issues with this are well documented, and it leads to a maintenance nightmare.

Referencing Source Code

We could make use of an existing source code file and invoke a method in that file. This is not ideal, but a step in the right direction.

Referencing an Assembly

Once we have a cohesive grouping in our functionality, we could package that as an assembly and reference the assembly in any subsequent usage scenarios. We can then invoke the relevant functionality by making use of the code exposed by the assembly. Package managers such as Nuget are quite useful in such scenarios.

Over The Wire

Another way to get to functionality is by invoking it over the wire. We can connect to the relevant endpoint using an exposed protocol and then make the required call. This could be anything from a RESTful API, a Web Service, RPC, or even using a queue.

Practical Guidance

Bounded Context Granularity

Finding the correct granularity for a Bounded Context is quite important. If you find yourself considering making each Aggregate Root a Bounded Context in and of itself, then stop. This is much too fine grained and will end up being very confusing. If you have started down this path, you are going to have to group your Aggregate Roots into something that is more sensible.

Repositories

Repository A seems to require Repository B

This may be a case of Entity B being part of an Aggregate Root A. Creating an aggregate that contains the entity from Repository B may be the answer. Sometimes things are not as clear-cut as that, though.

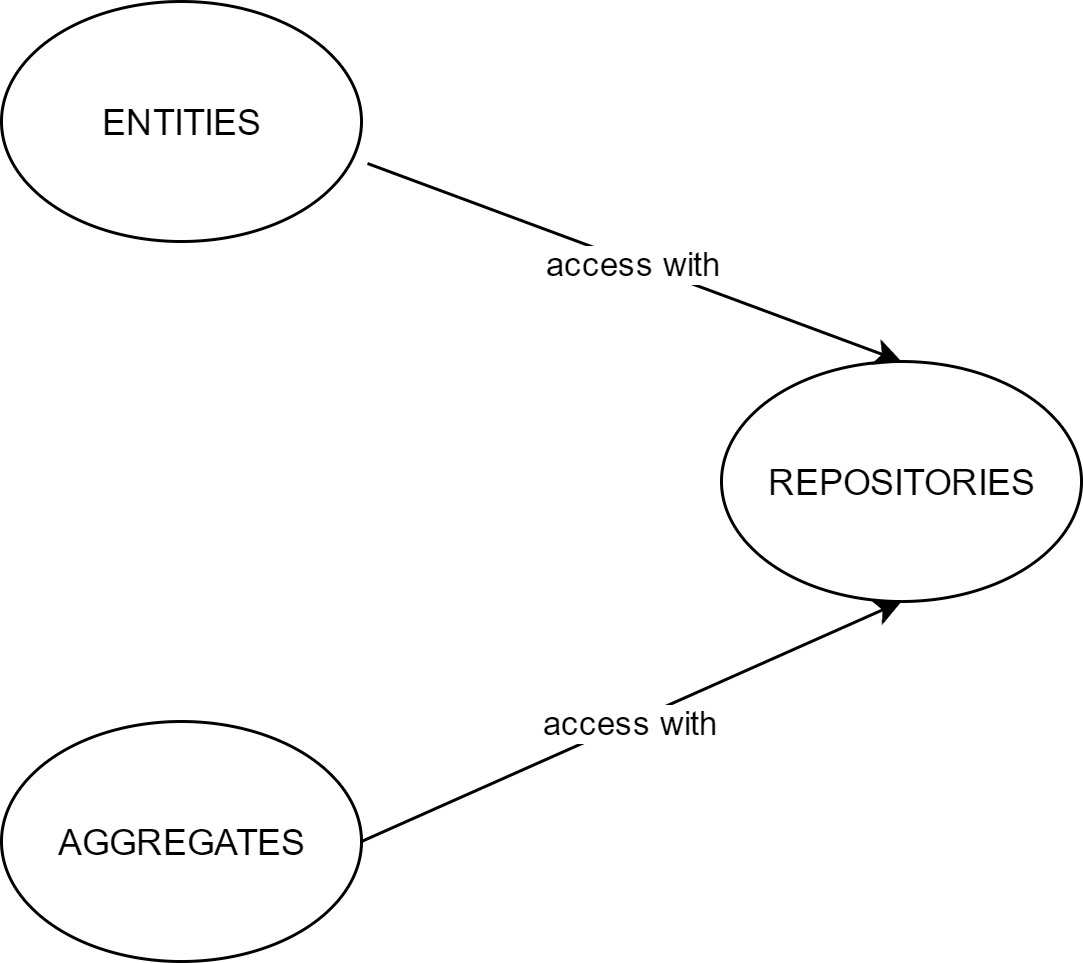

Since object orientation facilitates reuse, it is quite possible that we have some generic entity that we reuse between aggregates. In the original work by Eric Evans[1] on one of the very first pages, we have an image that contains the following relationships:

Minimal interface

Although the idea behind a repository is that it represents an in-memory collection, we should avoid returning lists of Aggregate Roots. To this end, we should aim to use the following methods on a repository:

public interface IAggregateRootRepository

{

AggregateRoot Get(Guid id);

void Save(AggregateRoot instance);

// not this:

IEnumerable<AggregateRoot> ForSomethingElse(Guid id);

}If you do find yourself having a method in your repository that returns a collection of Aggregate Roots, you may need to rethink your design. The domain is concerned with commands performed on it and should not be used for querying. You also very rarely need to alter more than one Aggregate Root within the same operation or transaction.

Repository is responsible for saving Value Objects, even if shared

A repository implementation is responsible for saving any Value Objects that are part of the Aggregate Root. You should never create a repository for a Value Object.

Generic IRepository

There typically should be no need for a generic IRepository interface as you are going to need to get to each of your specific interfaces anyway. In some cases, it may be useful when you want to use some decorator functionality such as caching, but you still need to consider this carefully. Applying our guidelines around repository interfaces, we could have something along the lines of this:

public interface IRepositoryGet<TId, TAggregate>

{

TAggregate Get(Tid id);

}

public interface IRepositorySave<TAggregate>

{

void Save(TAggregate instance);

}

public interface IRepository: IRepositoryGet, IRepositorySave

{

}If you do find that you need to implement some specific decorator functionality, it may be worthwhile creating a role-specific interface such as ICachingRepository to make the intent clearer.

Aggregates

Aggregate Roots should be one level deep

If you decide to not follow this guideline, you will need to carefully consider why you are doing so. To understand why I say this, we need to take a look at why one class depends on another in the first place. There are two reasons.

Ownership

An example of an ownership relationship is a Customer having a related collection of Order objects. Ownership is only ever between Aggregate Roots. One Aggregate Root may never contain or hold a reference to another Aggregate Root. There is nothing wrong in passing one Aggregate Root to another as a transient reference in order for the other Aggregate Root to perform some action. In order to break any direct reference, there is typically only an Id to the related Aggregate Root, or you could make use of a Value Object to represent the relationship.

In the case of an Order one would usually store only the CustomerId. However, one of the reasons an Order can stand alone is that it is an Aggregate Root and, as such, should one ever delete the associated customer, the order would not necessarily be deleted. The order has a lifetime of its own. To that end, one could create an order without a real customer. Think of this in terms of a physical order book. You pick up a pen and write the details into the book along with the items ordered. If you recognize your customer or they give you their customer number, you could add that as a reference. A new customer that is only going to buy something from you once does not get a customer number, and you only record the bare minimum. This is along the same lines as having an account with a clothing store. Some people buy cash and others buy on account or use a loyalty card. All the sales can be processed whether or not it is a known customer or a cash sale.

Therefore, we could denormalize the customer data into a Value Object that contains an optional CustomerId. This could be stored as a nullable foreign key in our data store.

Containment

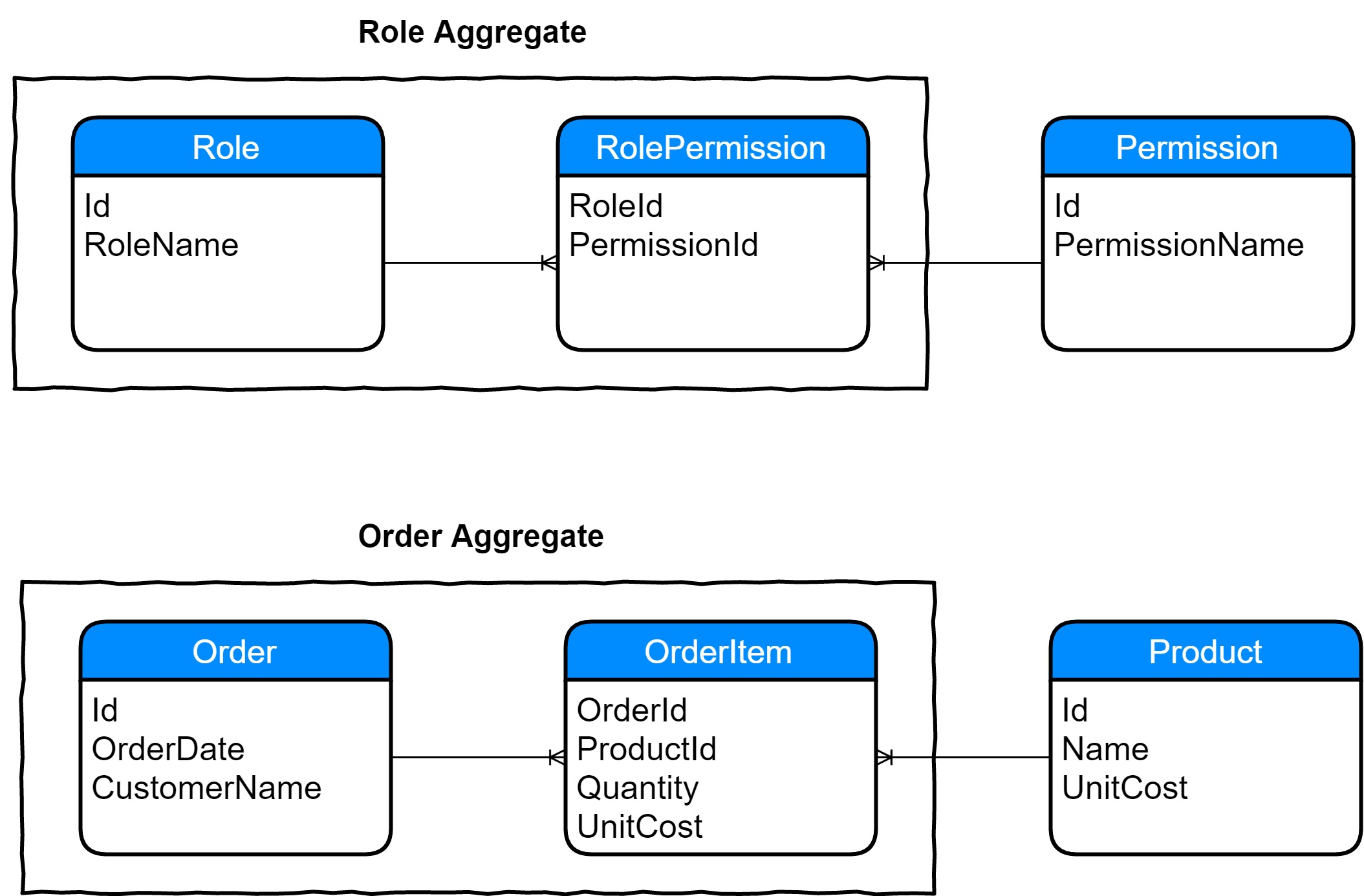

These Entities or Value Objects typically have no reason to exist on their own, and you'll find that they also usually represent an associative entity in terms of Entity Relationship Modeling:

In such a case, we'll pick one of the sides as the primary owner. An OrderItem is really an associative entity for the Order/Product relationship. Now, if an Order should ever be deleted, the OrderItem associations should also be deleted as it has no reason to exist without the associated Order. If the Role is ever deleted, all the associated RolePermission associations should also be deleted. An associative entity may also contain any additional data pertaining to the relationship.

Most many-to-many associations will have a stronger affinity with one of the two sides. It may feel as though you need to have the identifier of the other Aggregate Root in each. For instance, when we take something like a Job and Board, would we have a Job contain a list of Board identifiers or a Board contain a list of Job identifiers? Perhaps the link is so significant that we use a JobBoard Value Object or even create it as an Aggregate Root and use the identifiers on one of the ends. Since I'm sure many of us haven't really considered a scenario like this, it may require us to give it quite a bit of thought and discuss this with our Domain Expert.

Most of us have come across an Order with its OrderItem collection. But if you think about it, we actually have a many-to-many relationship here between Order and Product. We typically don't call the relationship OrderProduct even though in a purely technical sense we could. We usually know that the items are part of an order and make up an aggregate. This is the level of comfort we should strive for in our domain modeling. Given enough time and experience, we usually get there, but it may take a couple of modeling changes to get there. Always aim to break down associations to a one-to-one or one-to-many structure.

Whenever one ClassA's lifetime is dependent on the lifetime of ClassB, we can safely say that ClassB is the Aggregate Root of ClassA.

Example of deep hierarchy

An Aggregate Root is used to represent a consistency boundary. As such, it may be necessary to break up a graph that goes too deep. However, you may find situations where the consistency boundaries seem to overlap.

Consider the following structure:

public class Project

{

private readonly List<ProjectItem> _items = new List<ProjectItem>();

public Project(string name, decimal budgetedCost)

{

Name = name;

BudgetedCost = budgetedCost;

}

public string Name { get; private set; }

public decimal BudgetedCost { get; }

public bool Started { get; private set; }

public decimal TotalItemCost()

{

return _items.Sum(item => item.Cost);

}

public void OnAddItem(ProjectItem item)

{

_items.Add(item);

}

public void Start()

{

var totalItemCost = TotalItemCost();

if (totalItemCost != BudgetedCost)

{

throw new DomainException($"The total item cost of '{totalItemCost:N}' does not equal the project budgeted cost of '{BudgetedCost:N}'.");

}

Started = true;

}

}

public class ProjectItem

{

private readonly List<Task> _tasks = new List<Task>();

public ProjectItem(string name, int cost)

{

Name = name;

Cost = cost;

}

public string Name { get; private set; }

public int Cost { get; private set; }

public bool IsAllocationComplete { get; private set; }

public decimal TotalTaskAllocation()

{

return _tasks.Sum(task => task.AllocatedPercentage);

}

public void OnAddTask(Task task)

{

_tasks.Add(task);

}

public void AllocationComplete()

{

if (TotalTaskAllocation() != 100)

{

throw new DomainException("All task allocations should add up to 100%.");

}

IsAllocationComplete = true;

}

}

public class Task

{

public Task(string name, decimal allocatedPercentage)

{

Name = name;

AllocatedPercentage = allocatedPercentage;

}

public string Name { get; private set; }

public decimal AllocatedPercentage { get; private set; }

}In this scenario, a Project cannot be started if the BudgetedCost has not been utilized fully, and the ProjectItem cannot have its allocation completed until the total cost percentage has been allocated to tasks. This means that a Project can also not be started if all the ProjectItem entries do not have their IsAllocationComplete set to true.

However, this structure is too deep, and when we Get the Project from the ProjectRepository, we will need to load the ProjectItem collection related to the project as well as the Task collection for each ProjectItem.

Given our guideline that an object graph should be 1 layer deep and how and why objects are related, it means that Project is the Aggregate Root of ProjectItem and that ProjectItem is the Aggregate Root of Task. This means that ProjectItem cannot be contained in Project as one Aggregate Root should not hold a reference, other than transient, to another Aggregate Root.

We can break this up using the following structure:

public class Project

{

private readonly List<ProjectItemAssocition> _items = new List<ProjectItemAssocition>();

public Project(string name, decimal budgetedCost)

{

Name = name;

BudgetedCost = budgetedCost;

}

public string Name { get; private set; }

public decimal BudgetedCost { get; }

public bool Started { get; private set; }

public decimal TotalItemCost()

{

return _items.Sum(item => item.Cost);

}

public void OnAddItem(ProjectItemAssocition item)

{

_items.Add(item);

}

public void Start()

{

var totalItemCost = TotalItemCost();

if (totalItemCost != BudgetedCost)

{

throw new DomainException($"The total item cost of '{totalItemCost:N}' does not equal the project budgeted cost of '{BudgetedCost:N}'.");

}

Started = true;

}

}

public class ProjectItemAssocition

{

public ProjectItemAssocition(string name, decimal cost)

{

Name = name;

Cost = cost;

}

public string Name { get; private set; }

public decimal Cost { get; private set; }

}

public class ProjectItem

{

private readonly List<Task> _tasks = new List<Task>();

public ProjectItem(string name, decimal cost)

{

Name = name;

Cost = cost;

}

public string Name { get; private set; }

public decimal Cost { get; private set; }

public bool IsAllocationComplete { get; private set; }

public decimal TotalTaskAllocation()

{

return _tasks.Sum(task => task.AllocatedPercentage);

}

public void OnAddTask(Task task)

{

_tasks.Add(task);

}

public void AllocationComplete()

{

if (TotalTaskAllocation() != 100)

{

throw new DomainException("All task allocations should add up to 100%.");

}

IsAllocationComplete = true;

}

}

public class Task

{

public Task(string name, decimal allocatedPercentage)

{

Name = name;

AllocatedPercentage = allocatedPercentage;

}

public string Name { get; private set; }

public decimal AllocatedPercentage { get; private set; }

}By adding a single layer, we have added a hard edge to our Project Aggregate Root.

Aggregate Root A has multiple owners

In a situation where you may have some Aggregate Root such as a FinancialAccount that may have more than one owner, the owner would need to define the relationship.

public FinancialAccount(Guid id, string type)

{

Id = id;

Type = type;

}

public Guid Id { get; private set; }

public string Type { get; private set; }

public decimal Balance { get; private set; }

public void Debit(decimal amount)

{

Balance += amount;

}

public void Credit(decimal amount)

{

Balance -= amount;

}Financial accounts may be related to some ConsumerAgreement or by another Aggregate Root such as an Invoice:

public class RelatedFinancialAccount

{

public Guid Id { get; private set; }

public string Description { get; private set; }

public RelatedFinancialAccount(Guid id, string description)

{

Id = id;

Description = description;

}

}

public class ConsumerAgreement

{

public Guid Id { get; private set; }

public string CustomerName { get; private set; }

private readonly List<RelatedFinancialAccount>

_financialAccounts = new List<RelatedFinancialAccount>();

public ConsumerAgreement(Guid id, string customerName)

{

Id = id;

CustomerName = customerName;

}

public void OnAddFinancialAccount(

RelatedFinancialAccount financialAccount)

{

_financialAccounts

.Add(financialAccount);

}

}The Invoice would have a similar structure with its \linebreakRelatedFinancialAccount list of Value Objects.

Keep in mind that no AR may contain an instance reference to another Aggregate Root, so a ConsumerAgreement may not keep a list of FinancialAccount instances but rather a list of the related Aggregate Root identities or a list of Value Objects representing the related Aggregate Root.

Constructor seems to be getting somewhat long

Keep in mind that an Aggregate Root or Entity should always be valid. This does not mean that it should always be complete.

You can go as far as having an empty constructor as long as that means the entity is valid. In general, one would need to add that shape to the object that at least, probably, uniquely identifies the object or, at least, something that gives it meaning.

After this, you can invoke command- or event-style methods to populate the entity:

public class Customer

{

public Customer(Guid id, string name)

{

Id = id;

Name = name;

}

public Guid Id { get; private set; }

public string Name { get; private set; }

public string Address { get; private set; }

public CustomerMoved Move(string address)

{

return On(new CustomerMoved

{

Address = address

});

}

public CustomerMoved On(CustomerMoved customerMoved)

{

Address = customerMoved.Address;

return this;

}

}

public class CustomerMoved

{

public string Address { get; set; }

}In order to get your constructor length under control, you may need to consider splitting your class into more manageable parts, or you could opt to group some of the properties and behavior into Value Objects that are passed into the constructor.

Do not inject anything into aggregates

No dependency such as a service or repository should ever be injected into an aggregate either by way of the constructor or any other form such as the more insidious property injection.

Avoid using repositories/queries in aggregates (double dispatch)

One of the suggested techniques you are likely to encounter is to pass a required service or repository dependency into a method call. Try to avoid that if at all possible.

Calculation Example

Whenever a repository is required, the generally accepted approach is to make use of double dispatch. The domain is comprised of aggregates, entities, and value objects in order to encapsulate the behavior. All those objects should ideally either have the data they require internally when invoking a behavior, or the required data should be passed to it.

If there is no way to determine the data from outside the entity and you absolutely have to use double dispatch, then it would be fine. However, consider the following example:

public void Calculate(ICalculationValueQuery query)

{

_internalValue = _internalValue + query.GetValue(_idOfValueRequired);

}When we have to test the above method, we will need to mock the ICalculationValueQuery object in order to pass in the value. What the domain is actually interested in is the following:

public void Calculate(decimal value)

{

_internalValue = _internalValue + value;

}I am certain that when we go back to the ubiquitous language, we will probably find that the above behavior is described along the following lines:

There are times when we can calculate something on the

Widgetusing a value.

When we truly do need to use double dispatch, it may be a good idea to facilitate testing using a combination of the above:

public void Calculate(ICalculationValueQuery query)

{

Calculate(query

.GetValue(_idOfValueRequired)

);

}

public void Calculate(decimal value)

{

_internalValue = _internalValue + value;

}Discount Example

It may also be that your design can be optimized by making the result of the required call part of the aggregate's state.

We should pay close attention to the ubiquitous language here and especially look out for the word "when":

There are various mechanisms that we use to determine whether a customer qualifies for discount. When the customer does have a discount all qualifying items such as orders and vouchers have to include the discount.

To implement the requirement, we can make use of an event-driven architecture using messaging to notify the relevant Bounded Context of the change. Let's assume that we store customer discount information in another aggregate and, therefore, in another database table. The discount, however, is valid only until a given date. We could have something such as this:

public class Customer

{

public Guid Id { get; private set; }

public Customer(Guid id)

{

Id = id;

}

public void ApplyDiscount(IDiscountVisitor visitor, ICustomerDiscountQuery query, DateTime date)

{

visitor.OnDiscountReceived(

query.GetDiscount(Id, date));

}

}The above example is somewhat simplistic since the only Customer state we are using is the Id. However, it may be that we need to use more of the internal state. As mentioned, an alternative may be to store the discount as a Value Object in the Customer:

public class Order : IDiscountVisitor

{

public void OnDiscountReceived(decimal percentage)

{

}

}

public class Voucher : IDiscountVisitor

{

public void OnDiscountReceived(decimal percentage)

{

}

}

public class Customer

{

public class Discount

{

private decimal Percentage { get; }

private DateTime _expiryDate = DateTime.MinValue;

public Discount(decimal percentage, DateTime expiryDate)

{

Percentage = percentage;

_expiryDate = expiryDate;

}

public bool Expired(DateTime date)

{

return _expiryDate < date;

}

}

public Guid Id { get; private set; }

private Discount _discount;

public Customer(Guid id)

{

Id = id;

}

public void OnDiscountReceived(decimal percentage, DateTime expiryDate)

{

_discount = new Discount(percentage, expiryDate);

}

public void ApplyDiscount(IDiscountVisitor visitor, DateTime date)

{

if (_discount == null || !_discount.Expired(date))

{

return;

}

visitor.OnDiscountReceived(

_discount.Percentage);

}

}To wire this together, a change in the discount status would publish an event that would be processed by the relevant handler to update the discount available to the customer. This would be the most appropriate, and possibly only, solution when dealing with distinct and separate Bounded Contexts where the discount is not managed in the customer's Bounded Context.

Avoid using services in aggregates (double dispatch)

As is the case with repositories, it may seem as though we need to use double dispatch when using a service. However, when doing so we are coupling on a behavioural level. This should probably be handled by a dedicated domain service.

For example, we may have a somewhat simplified EMailService:

public class EMailService : IEmailService

{

public void Send(EMailMessage message)

{

new SmtpClient().Send(new SmtpMessage(message.Recipient, message.Body, message.IsHtml);

}

}If we need to send an e-mail for an order, we could go with the following on the Order class:

public void SendEMail(IEmailService service)

{

service.Send(new EMailMessage(_contactEMail, "Hello, your order has been shipped.", false));

}It may not be the best idea to have an order know how to e-mail itself. However, we could use the Order as a factory for an EMailMessage:

public EMailMessage EMailMessage()

{

return new EMailMessage(_contactEMail, "Hello, your order has been shipped.", false);

}This may still not be ideal as we may not wish to pull the e-mail generic subdomain into our core domain. In fact, if we later decide that we could text the customer, things would need to be refactored somewhat. We could rather either return the required data or pass in some generic object that we need populated:

public ContactDetails ContactDetails()

{

return new ContactDetails(_contactEMail, _contactPhone, _deliveryAddress);

}

public void ApplyNotification(IOrderNotificationVisitor visitor)

{

visitor.EMailAddress = _contactEMail;

visitor.Phone = _contactPhone;

visitor.DeliveryAddress = _deliveryAddress;

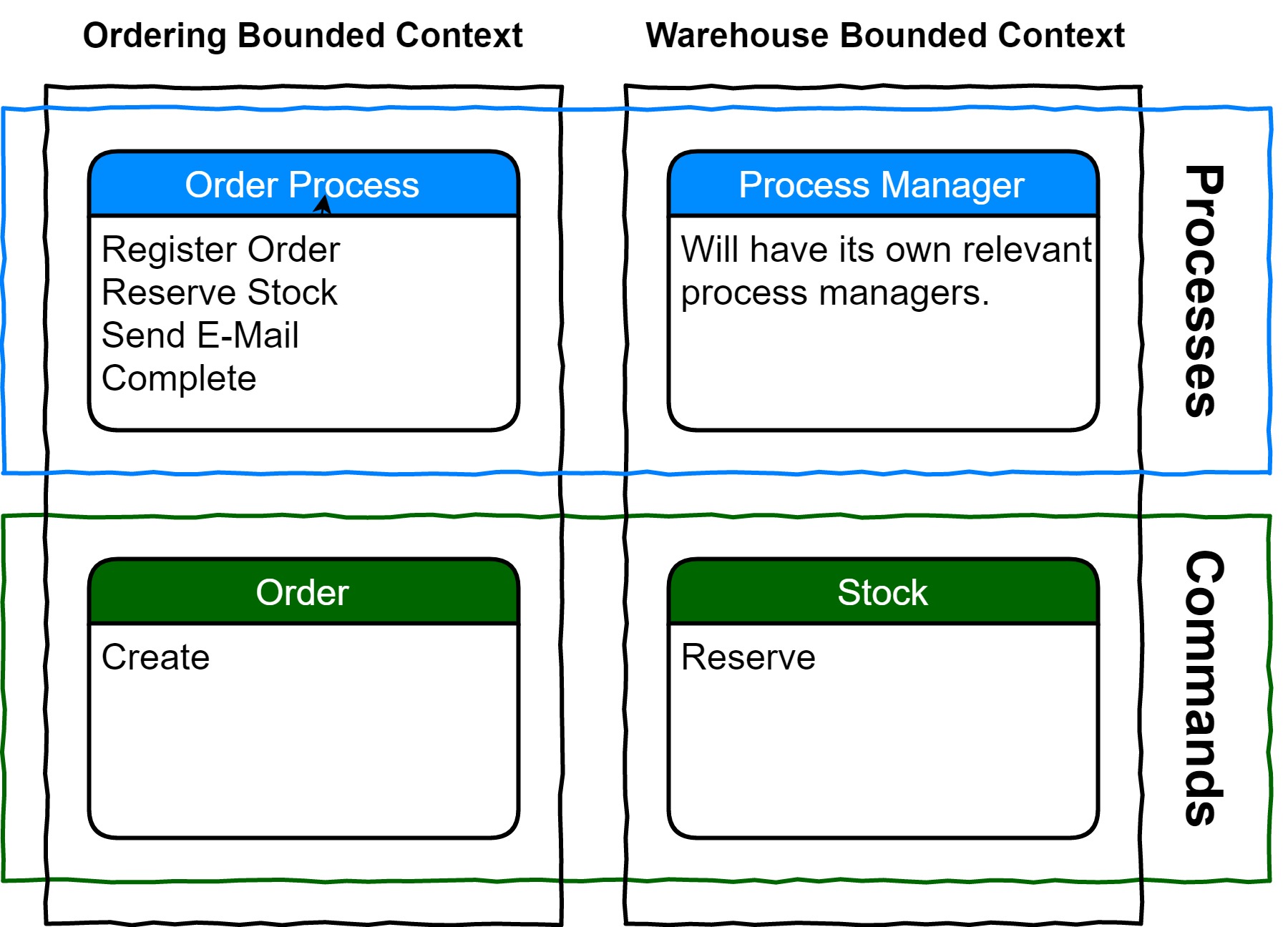

}In a more mature environment, we may have a dedicated e-mail handling endpoint and an order process management Bounded Context that orchestrates a multiple message exchange that would keep track of when to dispatch a SendEMailCommand for the order and then respond to the corresponding EMailSendEvent in order to continue the order process.

Apply changes to a single aggregate per transaction

If your data store does not support transactions, then this section does not apply.

Aggregates represent a consistency boundary. This does not necessarily equate to a database transaction boundary. However, every attempt should be made to perform changes to only a single aggregate within a database transaction. If you absolutely have to change more than one aggregate within a transaction, you should give it careful thought.

The reason that this is so important has to do with locking. For higher volume transactions, you may run into many more deadlock scenarios when manipulating more than one aggregate in a transaction. Rather attempt to break it into two distinct operations with one leading to the next. This will in all probability require a Process Manager for the orchestration.

There are definitely going to be times when you do need to change more than one aggregate within the same transaction. For instance, let's say we are going to Debit one of our FinancialAccount aggregates on our ConsumerAgreement and Credit another. It is quite conceivable that we can use a Process Manager to accomplish this but, on the other hand, we may need to keep it immediately consistent. You will come across the argument that in the real world the two accounts are typically held by different institutions, so eventual consistency is implied. However, in our scenario we are not transferring money between two different bank accounts but rather within our domain.

This boils down to how sensitive the data is to consistency. If you can get away with eventual consistency, then go with a Process Manager; else, for immediate consistency, you may need to bend the rules.

Persistence requirements for changes to aggregates

When not making use of event sourcing, you may find some instances where you would like to optimize your persistence by not persisting an entire aggregate. You may go as far as having use-case-specific methods on your repository: When not making use of event sourcing, you may find some instances where you would like to optimize your persistence by not persisting an entire aggregate. You may go as far as having use-case-specific methods on your repository:

public interface ICustomerRepository

{

Customer Get(Guid id);

void Save(Customer customer);

void Activated(Customer customer);

void DiscountPercentageReviewed(Customer customer);

}In this way, the repository may have finer control over the persistence.

An ORM usually tracks changes to objects using a very finely grained mechanism. There is nothing preventing us from sprinkling some hints into our code. When we have an Order with 100 OrderItem instances and we call the .Save(order) method on our OrderRepository, it has no choice but to save all those items. When we subsequently change a single order item or add a new one, how would our repository save those items? One option is to load all the items from the persistence store and compare them. Another option may be to have some property on our OrderItem such as IsAppended. When we regard our OrderItem as a value object, we can replace any changes with new items, and then when we call the .Save(order) method, our repository can loop through the order items and save only the appended items. We would need to still remove any items that have been altered. Here we can either keep a list on the order or add an IsRemoved onto the OrderItem.

This is all still persistence-ignorant since these hints are not part of any persistence mechanism but part of our domain code that tracks changes. You will need to make a call on how to deal with these persistence issues.

Always valid classes versus an IsValid() method

Keeping in mind that domain objects should always be valid, you may find yourself in a situation where it feels as though you may not have all the data to have your domain object in a valid state.

A typical reaction may be to add an IsValid() method. However, you need to ask yourself what that method does. In some instances, it is merely a case of not having a complete object. At some stage there is an expectation that it is complete, and we are tempted to ask the object whether it is so as in the following example:

public class Customer

{

public enum CustomerDiscounLevel

{

None,

Bronze,

Silver,

Gold

}

public Customer(Guid id, string name)

{

Id = id;

Name = name;

}

public Guid Id { get; private set; }

public string Name { get; private set; }

public decimal DiscountPercentage { get; set; }

public CustomerDiscounLevel DiscountLevel { get; set; }

public bool IsValid()

{

switch (DiscountLevel)

{

case CustomerDiscounLevel.Gold:

{

return DiscountPercentage > 15;

}

case CustomerDiscounLevel.Silver:

{

return DiscountPercentage > 10;

}

case CustomerDiscounLevel.Bronze:

{

return DiscountPercentage > 5;

}

}

return true;

}

}However, it becomes important to know when to ask. Should the repository ask? Also, we may know that the customer isn't valid, but we don't know why. Having those setters exposed is also troublesome.

If we attempt to use the Tell Don't Ask approach, we could tell the object that it is in a certain state, and if it isn't the case, it can throw an exception. For instance:

public enum CustomerDiscounLevel

{

None,

Bronze,

Silver,

Gold

}

public Customer(Guid id, string name)

{

Id = id;

Name = name;

}

public Guid Id { get; private set; }

public string Name { get; private set; }

public decimal DiscountPercentage { get; private set; }

public CustomerDiscounLevel DiscountLevel { get; private set; }

public void Gold(decimal discountPercentage)

{

if (DiscountPercentage <= 15)

{

throw new DomainException("A gold customer must receive a discount of more than 15%.");

}

DiscountPercentage = discountPercentage;

DiscountLevel = CustomerDiscounLevel.Gold;

}

public void Silver(decimal discountPercentage)

{

if (DiscountPercentage <= 10)

{

throw new DomainException("A silver customer must receive a discount of more than 10%.");

}

DiscountPercentage = discountPercentage;

DiscountLevel = CustomerDiscounLevel.Silver;

}

public void Bronze(decimal discountPercentage)

{

if (DiscountPercentage <= 5)

{

throw new DomainException("A bronze customer must receive a discount of more than 5%.");

}

DiscountPercentage = discountPercentage;

DiscountLevel = CustomerDiscounLevel.Bronze;

}In this way, we have not only removed the IsValid() method, but we have also made our domain more expressive.

Don't perform set-based validations in the model

If you have some property that spans an entire set, you should leave that up to the infrastructure to enforce. A typical example is a unique constraint such as a unique username or e-mail. These are better handled by infrastructure such as a database index.

You could perform an initial query to check for uniqueness before accepting the command, but it is quite possible that a conflict may arise a couple of steps later.

Using Aggregates as factories for other Aggregates

We are all familiar with creating instances of classes:

// new keyword

var paint = new Paint("Blue");

// System.Activator

var paint = System.Activator

.CreateInstance(typeof(Paint), "Blue");

// factory

var factory = new PaintFactory();

var paint = factory.Create("Blue");

// factory methods

var paint = Paint.Create("Blue");I have not seen the factory pattern used very often, and it may have something to do with dependencies. In most instances, the constructor will suffice. Your mileage may vary.

Creating any object requires input data, and the constructor typically requires some basic, mandatory, data that will place the object in some identifiable and usable state. To this end, one could use one Aggregate Root as the factory for another:

var blue = new Paint("Blue");

var green = blue.Mix("Yellow");The example above is using a rather simple scenario, and in all probability those objects are Value Objects. Also, the fact that the result of the Mix operation is another Paint object indicates a closure under the operation since all the members involved in the operation are of the same set. In most scenarios this will not be the case:

var cart = new ShoppingCart();

cart.Add("Socks", 1);

cart.Add("Coffee Table", 1);

cart.Add("Ping Pong Bat", 2);

var customer = customerRepository.Get("the-customer");

var order = cart.Order(customer);

orderRepository.Save(order);Here we create an Order from the ShoppingCart by providing the Customer that the Order is for. This technique is only going to work when the Aggregate Root containing the factory method has access to all the Aggregate Roots involved. You may immediately be thinking how that would work since Bounded Contexts should not be referencing each other. The way you'd need to do this is to perform this type of operation using a separate Bounded Context or integration layer that is used to compose the constituent Bounded Contexts, or even from a Value Object that represents an Aggregate Root from another Bounded Context.

Aggregate could contain a list with thousands of entries

At times, it seems as though your Aggregate Root is going to become too large to load into memory since it contains collections that are huge. The very first thing to remember is that your domain model should not be used for querying. We do not want to load an Aggregate Root for the sole purpose of displaying data. If, however, we have access to an Aggregate Root instance that happens to expose the data we require, then we could use the instance, but chances are that is only going to work in instances where we are working with the specific Aggregate Root anyway. If you do go with this option, do not be tempted to have your domain code return any UI elements.

Next, you have to ask yourself whether you truly need to load the entire collection of related objects by keeping in mind that the main purpose of an Aggregate Root is to enforce invariants and consistency. If you require the collection to enforce uniqueness, rather use your data store to do that. Your domain is not the best place for set-based operations.

Also keep in mind that if the collection is not necessarily going to be contributing to enforcing any invariants, then you do not need to load it. Historical data is probably not going to be required.

Let's take a hypothetical requirement that a Customer may have a maximum of 5 active orders at any one time. Here we can immediately use a collection of ActiveOrder Value Objects. We may even keep only an ActiveOrderCount on our Customer that is kept up to date when order statuses are changed.

We may even want to check the invariant on the Customer:

public class Customer

{

private readonly List<ActiveOrder> _activeOrders = new List<ActiveOrder>();

public void AddActiveOrder(ActiveOrder order, int maximumActiveOrderCount)

{

if (_activeOrders.Count >= maximumActiveOrderCount)

{

throw new DomainException("Cannot add another active order.");

}

_activeOrders.Add(order);

}

}Structuring Aggregate Roots

Given that an Aggregate Root acts as a self-contained unit, one could design the classes to represent that fact. If we take the Order / OrderItem example, a rather traditional approach may be to design two disparate classes:

public class Order

{

public Guid Id { get; }

public string Customer { get; }

public DateTime DateRegistered { get; }

private List<OrderItem> _items = new List<OrderItem>();

public Order(Guid id, string customer, DateTime dateRegistered)

{

Id = id;

Customer = customer;

DateRegistered = dateRegistered;

}

public Order OnAddItem(OrderItem item)

{

_items.Add(item);

return this;

}

public OrderItem GetItem(string product)

{

var result = _items.Find(item => item.Product.Equals(product));

if (result == null)

{

throw new DomainException($"Could not find an item for product '{product}' in order with id '{Id}'.");

}

return result;

}

// mutable approach (A)

public OrderItem AddQuantity(string product, int count)

{

GetItem(product).Add(count);

}

// immutable approach (B)

public OrderItem AddQuantity(string product, int count)

{

var item = GetItem(product);

_items.Remove(item);

// perhaps create a new item here

var result = new OrderItem(product, item.Cost, item.Quantity + count)

OnAddItem(result);

// or use a factory method (C)

var result = item.Add(count);

OnAddItem(result);

return result;

}

}

public class OrderItem

{

public string Product { get; }

public decimal Cost { get; }

public decimal Quantity { get; }

public OrderItem(string product, decimal cost, decimal quantity)

{

Product = product;

Cost = cost;

Quantity = quantity;

}

// mutable approach (A)

public OrderItem Add(int count)

{

quantity += count;

return this;

}

// immutable approach (B) with factory method (C)

public OrderItem Add(int count)

{

return new OrderItem(Product, Cost, Quantity + count);

}

}The above illustrates some techniques that you may be familiar with or have seen before. However, when the contained OrderItem is structured in this manner, it seems somewhat overly exposed.

Another option that makes the association more apparent would be to use nested classes and even a nested interface to allow access to some internals of the Item class to only the Order class:

public class Order

{

public Guid Id { get; }

public string Customer { get; }

public DateTime DateRegistered { get; }

private List<Item> _items = new List<Item>();

public Order(Guid id, string customer, DateTime dateRegistered)

{

Id = id;

Customer = customer;

DateRegistered = dateRegistered;

}

public Order OnAddItem(Item item)

{

_items.Add(item);

}

public Item GetItem(string product)

{

var result = _items.Find(item => item.Product.Equals(product));

if (result == null)

{

throw new DomainException($"Could not find an item for product '{product}' in order with id '{Id}'.");

}

return result;

}

// mutable approach (A)

public OrderItem AddQuantity(string product, int count)

{

GetItem(product).Add(count);

}

// immutable approach (B)

public OrderItem AddQuantity(string product, int count)

{

var item = GetItem(product);

_items.Remove(item);

// perhaps create a new item here

var result = new OrderItem(product, item.Cost, item.Quantity + count)

OnAddItem(result);

// or use a factory method (C)

var result = item.Add(count);

OnAddItem(result);

return result;

}

public class Item : IItem

{

public string Product { get; }

public decimal Cost { get; }

public decimal Quantity { get; }

public Item(string product, decimal cost, decimal quantity)

{

Product = product;

Cost = cost;

Quantity = quantity;

}

// mutable approach (A)

public Item IItem.Add(int count)

{

quantity += count;

return this;

}

// immutable approach (B) with factory method (C)

Item IItem.Add(int count)

{

return new OrderItem(Product, Cost, Quantity + count);

}

}

private interface IItem

{

Item Add(int count);

}

}In this way, all access to the contained class is through the Aggregate Root. This is something we need to strive for since any changes made to the contained objects may invalidate the Aggregate Root. Since Value Objects should be immutable, this may be a moot point, but in cases where you need to restrict access to methods within a contained object, this technique certainly will solve that problem.

We can take this a step further and use a private class and public interface in order to prevent external instance creation. We could still use a private interface, as above, to prevent external access to internals that are meant only to be accessed by the containing Aggregate Root class:

public class AggregateRoot

{

private readonly List<IPublic> _items = new List<IPublic>();

public IEnumerable<IPublic> Items()

{

return new ReadOnlyCollection<IPublic>(_items);

}

private interface IPrivate

{

IPublic AccessibleOnlyByAggregateRoot();

}

public interface IPublic

{

void SomethingAnyoneCanCall();

}

private class ContainedClass : IPublic, IPrivate

{

public ContainedClass()

{

// can only be instantiated by AggregateRoot

}

public void SomethingAnyoneCanCall()

{

}

IPrivate

.AccessibleOnlyByAggregateRoot()

{

}

}

}Another point to remember when structuring Aggregate Roots is that one Aggregate Root may not reference another Aggregate Root. Now, this does not mean that one Aggregate Root may never hold an instance of another Aggregate Root, but rather that we do not want to rely on a deep object graph when retrieving an Aggregate Root.

For instance, if an Order and a Customer are both Aggregate Roots, then the following is most certainly not a good idea:

public class Order

{

public Guid Id { get; }

public Customer Customer { get; }

public Order(Guid id, Customer customer)

{

Id = id;

Customer = customer;

}

}This leads to all sorts of problems. We would not be able to retrieve the order without having a customer object. Where would this customer object come from? Who has the responsibility to retrieve the customer object? Also, when we persist the order, who has the responsibility to persist the customer?

These are the types of problems we solve by ensuring that we have hard edges around our Aggregate Roots.

We may opt to rather only store some Value Object that contains data that belongs to the order in any event. This data should be denormalized into the order:

public class Order

{

public Guid Id { get; }

public CustomerReference CustomerReference { get; }

public Order(Guid id, CustomerReference customer)

{

Id = id;

Customer = customer;

}

public class CustomerReference

{

public Guid Id { get; }

public string Name { get; }

public CustomerReference(Guid id, string name)

{

Id = id;

Name = name;

}

}

}The relationship is now clearer, and we can see that we are dealing with only a reference to a customer. Had we stored only the CustomerId, that too would suffice, but there is nothing preventing us from denormalizing the customer's name into the order.

Just a note on file size: although we should strive toward keeping things small and manageable, there is a difference between file size and class and method sizes. For instance, once we opt to include some Value Objects within our Aggregate Root class as nested classes, the Aggregate Root class will be bigger. Moving that nested class out may decrease the size of the file but changes little in respect of the design. It may even make it less fluid. One technique you may wish to employ should your file seem rather unwieldy is to use a partial class in a separate file for your Aggregate Root and then place the nested class in that file.

Invariants

We should be adding all the required invariants into our domain model. Some rules may require configurable values, and these we can pass in when performing the relevant command. An Aggregate Root cannot do much about factors external to the domain model, and you should be cognizant of this fact so that you do not attempt to place every requirement into your domain model. Some invariants may seem as though they belong in your Bounded Context, but ensure that you are not trying to implement an invariant in the wrong Bounded Context.

An example of an external requirement is authorization. I tend to think about a domain model in terms of a physical calculator. It has a keypad with digits, some operators, and a display. If I decide that I want to secure my calculator, I cannot expect my calculator to do so. Anyone gaining access to my calculator can start performing operations provided by the domain model.

Your domain model should be focused on implementing the business requirements and not any infrastructure, security, performance, or other requirements. If it can aid in achieving these external requirements, then that is first prize, but it should be providing deterministic business functionality in a reliable way.

Any integration layer interacting with the domain has the responsibility to ensure that only authorized actions are permitted.

Invariants involving many Aggregate Roots

An example may be that we cannot create an Order for a Customer that has a status set to Inactive. The first thing to remember is that it is extremely hard to design any software that attempts to prevent a developer from performing a certain task, and this should not be your goal. It should be a fluid experience to do the right thing, and it should be painful to do the wrong thing. If you can design your software in this way, then any developers making use of your domain model should not be tempted to implement hacks.

To this end, it may be near impossible to prevent a developer from creating a new instance of an Order even if our invariant states that we may not create an Order for a Customer with an Inactive status. There are some techniques we could employ:

Using the owning Aggregate Root as the factory

As mentioned elsewhere, this technique is only going to work when both Aggregate Roots are available.

public class Customer

{

// implementation details

public Order CreateOrder(DateTime orderDate)

{

if (_status == CustomerStatus.Inactive)

{

throw new DomainException("Cannot create an order for an inactive customer");

}

return new Order(this.CustomerId, orderDate);

}

}Enforcing the invariant on a state change

The invariant can be enforced using either primitives, an explicit role-based interface, using the owning Aggregate Root, or using a rule container:

public class Order

{

// implementation details

public Order(DateTime orderDate)

{

_orderDate = orderDate;

}

// option (1): state change using primitives

public Order Place(Guid customerId, CustomerStatus status)

{

if (status == CustomerStatus.Inactive)

{

throw new DomainException("Cannot create an order for an inactive customer");

}

_customerId = customerId;

_orderStatus = OrderStatus.Placed;

}

// option (2): explicit role-based interface

public Order Place(IOrderCustomer orderCustomer)

{

if (orderCustomer.Status == CustomerStatus.Inactive)

{

throw new DomainException("Cannot create an order for an inactive customer");

}

_customerId = orderCustomer.CustomerId;

_orderStatus = OrderStatus.Placed;

}

// option (3): using the owning Aggregate Root

public Order Place(Customer customer)

{

if (customer.Status == CustomerStatus.Inactive)

{

throw new DomainException("Cannot create an order for an inactive customer");

}

_customerId = customer.Id;

_orderStatus = OrderStatus.Placed;

}

// option (4): using the owning Aggregate Root as the rule container

public Order Place(Customer customer)

{

customer.PlaceOrder(order); // <- invariant will throw in here

_customerId = customer.Id;

_orderStatus = OrderStatus.Placed;

}

// option (5): using a rule container

public Order Place(IOrderInvariants invariants)

{

invariants.PlaceOrder(order); // <- invariant will throw in here

_orderStatus = OrderStatus.Placed;

}

}Once again, we can see that using the owning Aggregate Root would require access to both should they be in separate Bounded Contexts.

We could, of course, decide that our application will enforce this rule, but we probably want to get it as close to our domain as possible in order to make it apparent. Security concerns are typically applied in the application layer and will not be a domain concern unless you are actually implementing a security-based Bounded Context.

External invariants

Some invariants may be "twice removed" (or more). Your domain proper within your Bounded Context cannot do much about these. Typically, these invariants will apply to a process, and you can elevate the enforcement thereof to the process manager itself. The invariants will become part of the process manager Bounded Context related to this Bounded Context. For instance, if we have an OrderManagement Bounded Context, it will mostly be involved with domain objects within this Bounded Context. However, to implement process managers related to orders, we may opt for an OrderProcessManagement bounded context that is also aware of interactions with other Bounded Contexts through, say, a messaging infrastructure.

Always valid vs complete

In general, Domain-Driven Design practitioners are of the opinion that all domain objects should be valid at all times. However, it is conceivable that we have different stages to the completeness of our entities that change the validity. This is especially true of most objects involved in a process. If we consider our process manager to be a first-class citizen in its owning Bounded Context, then there may be various statuses involved throughout the lifecycle of the process manager.

Each of the statuses may require a different set of data and, as such, we can check the invariants when moving to the next status. Again, we can check the invariants in various ways when we do our call to the GoToThisStatus() method.

Apply invariants at the correct level

As can be seen from the examples given thus far, we should strive to apply invariants where they are needed. If we consider something like a rule engine things can get a bit hairy. In general, you should really only be using a rule engine on the peripheral edges of your business. You are probably going to be getting the most mileage by using it when integrating with entities outside of your business. It is a kind of generic Anti-Corruption Layer to prevent distorted data from making its way into your business systems. On that note, you may hear the argument that you can define a rule once and use it in multiple places. This may be so, but you have to ask yourself why you would want to apply the same rule in multiple places. Most invariants belong in a single place.

Just as we have a system of record for data, we may think of our invariants in the same way. There is an authoritative bounded context that is concerned with a particular invariant. Anything applying something along the lines of the invariant outside of that space should be there purely for convenience. This is the same for front-end validation. We should never trust any provider talking to our domain as necessarily providing 100% valid data. An outside bit of code can act as the gatekeeper regarding access to the domain by way of authorization, but that is about as far as it goes.

Unique items in collection (e.g. e-mail address)

In general, you should not attempt to perform set-based validations within the domain, as domain entities are focused on individual invariants or small, localized, sets of entities or value objects within an Aggregate Root. The most common example of this is some unique constraint such as a Username or EMailAddress, but any key would qualify.

Only throw DomainException in domain

In most cases, you should use a well-defined exception class along the lines of DomainException or BusinessException to identify invariants.

Entities

Using an "Identifier" class

There really should not be any need for anything in the line of an Identifier class or a base Entity or AggregateRoot class. If we do make use of a framework of sorts, it should be as non-intrusive as possible. It is quite conceivable that we may have different identifiers for different entities depending on their use. Typically, we would use the same key format, but we may have to upgrade an existing legacy system iteratively, and it could be using auto-incrementing int keys, whereas we may decide to go with Guid keys going forward.

From time to time, you may run across something like this:

public interface IIndentifier<T>

{

T Key { get; set; }

}The above really does not pay much rent, and I suggest foregoing it altogether. Implement the requisite key directly on your domain object.

Auto-incrementing Ids vs Guids

While on the subject of identifiers, we could compare the pros and cons of int keys versus Guid keys. Some folks are of the opinion that int keys are faster than Guid keys, but I would suggest that this assertion be tested with a good number of records. With the speed of today's data stores, I have not found any noticeable difference in performance. Inserting new data may lead to a slight increase in overhead with Guid keys as they do take up more space and would lead to more index page splits. But with decent reindexing strategies in place, it all boils down to the low-level read IO.

A major disadvantage I find with auto-incrementing keys is that we cannot assign them up front, so it usually requires a read of sorts after an insert to get the newly assigned key. For example, when using SQL Server one could execute the following:

insert into MyTable

(

ColumnOne,

ColumnTwo

)

values

(

@ColumnOne,

@ColumnTwo

);

select scope_identity()After getting the result from, say, some ExecuteScalar type call, we need to assign that Id to the entity and/or use it with a dependent object to create the association.

When using a Guid, we can assign it up front and use it with any dependent object also. It would only require an insert and no subsequent read. This makes it particularly easy to associate data structures in code without relying on the data store to first generate the relevant identifiers.

Making a role explicit

Although there is typically very little sense in representing an Aggregate Root or Entity in terms of an interface, there are instances where interfaces may prove useful. A naive example may be some role such as IAttributeContainer that represents some behavior and/or data requirement on the implementer. In this way, we can have a domain object fulfill a specific role.

Value Objects

Value Objects are immutable structures that encapsulate specific data and may contain behavior. In particular, they are useful when representing an Aggregate Root or associative entity. When you need to represent some Aggregate Roots that belong to an upstream Bounded Context, it is possible to duplicate the required data in your own Bounded Context or to obtain the required data by means of an Anti-Corruption Layer. The structures are then represented in an immutable form in the guise of Value Objects in the relevant Bounded Context.

Shared Value Objects

I have run into scenarios where I have the exact same Value Object used by more than one Aggregate Root. You might think this strange, but in my calculation engine, I have something I call a Constraint. A Constraint has an Argument, a Name, and a Value. The Name represents the type of comparison that I would like to perform such as Equals, From, To, or NotEqualTo and can be extended as need be. The Value can be any applicable value. As an example, I may want to constrain the argument Age by applying constraint From with a value of 21.

I now have an Aggregate Root called Calculation that can contain a number of Constraint Value Objects. My Formula Aggregate Root can also contain a number of Constraint Value Objects. I store my Constraint Value Objects in a data store along with an OwnerName and an OwnerId. In this way, I can fetch the appropriate list of Constraint Value Objects.

One way to reconstitute the two Aggregate Roots applicable here along with the Constraint Value Objects would be to have each Repository build the Constraint objects itself. There would be duplication, and this is something we do not want. Having a ConstraintRepository seems odd as the Constraint is neither an Aggregate Root nor an Entity. The answer may lie in a ConstraintFactory that is used by both the CalculationRepository and the FormulaRepository. In keeping with the guideline that factories are useful in cases where building up the resultant object involves some complexity, we may opt to not use a factory if the Value Object is only one or two attributes. But it is up to you. Our ConstraintFactory could run off to the data store to fetch and build up the required Value Object or list of objects and return them to the caller.

However, the fact that you even have a shared Value Object may indicate something that you missed in your design. In my case, I simplified the structure down to only have a Formula and no more Calculation. The Calculation concept was more of a classification that could just as easily be represented by a Formula with some fancy footwork. Be on the lookout for this type of thing. Your design may need another look.

Structure vs Classification

In line with the Shared Value Object thinking, it is important to note that we should strive to not include classification in our structural design.